Speech synthesizers

Revision as of 09:50, 10 May 2017

Speech synthesis is the method of generating artificial speech by mechanical means or by a computer algorithm. The software can read texts aloud and should be able to create new sentences.[1] Consequently, it is used when there is a need to express information acoustically. Nowadays, it is found in text-to-speech applications (screen text reading, assistance for the visually impaired) and virtual assistants (GPS navigation, mobile assistants such as Google Now or Apple Siri). In addition, it is employed in any other situation where information usually available in text has to be communicated acoustically. It often comes paired with voice recognition.[2]

These applications require speech that is intelligible and natural-sounding. Current speech synthesis systems achieve a high degree of naturalness compared to a real human voice. Yet they are still perceived as non-human because minor audible glitches remain in their output.[3] Modern speech synthesis may have reached the 'uncanny valley', where artificial speech comes so close to perfection that humans find it unnatural.[4]

Main Characteristics

Speech synthesizers create synthetic speech by various methods. First, articulatory synthesis mimics how the human vocal tract works and how air passes through it. Second, formant synthesis manipulates sounds to create the basic building blocks of speech. Finally, concatenation synthesis assembles new utterances from a large database of pre-recorded audio samples.[5]

The speech synthesizer can be a device, such as DECtalk, which is used by Stephen Hawking,[6] or it can be an app for smartphones, which is more widespread at present.[7]

Articulatory Synthesis

Articulatory synthesis is the oldest form of speech synthesis. It tries to mimic the entire human vocal tract and the way air passes through it during speech. Modern synthesizers of this kind are based on an acoustic tube model, as is formant synthesis. Unlike those models, however, articulatory synthesis treats the whole system as the parameter and whole tubes as the controls. This allows very complex models to be built, but as complexity grows it becomes harder to track the internal behaviour of the system, because a small change in the tube settings propagates into complex speech patterns. This is also what makes articulatory synthesis attractive: researchers do not need to model complex formant trajectories explicitly. In other words, artificial speech is produced by controlling the modelled vocal tract rather than the produced sound itself.[8]
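
The simplest possible tube model shows how the shape of the tract, not the sound, is the thing being controlled. The sketch below uses a single uniform tube that is closed at the glottis and open at the lips. Such a tube resonates at the textbook quarter-wavelength frequencies F_n = (2n - 1)c / 4L. The speed of sound (350 m/s) and the tract length (17.5 cm) are assumed illustrative values and do not come from the cited sources.

```python
SPEED_OF_SOUND = 350.0  # m/s, approximate value in warm, humid air (assumed)


def uniform_tube_formants(length_m, count=3, c=SPEED_OF_SOUND):
    """Resonances of a lossless uniform tube closed at one end, open at the other.

    The tube resonates when its length is an odd multiple of a quarter
    wavelength, giving F_n = (2n - 1) * c / (4 * L).
    """
    return [(2 * n - 1) * c / (4.0 * length_m) for n in range(1, count + 1)]


# A 17.5 cm tract (a commonly quoted adult value, assumed here)
print(uniform_tube_formants(0.175))  # roughly 500, 1500 and 2500 Hz
```

Changing the single control parameter (the length) shifts every resonance at once. This hints at why articulatory models are steered through the tract geometry rather than formant by formant.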

This synthesis simulates the airflow through the human vocal tract. Unlike formant synthesis, the modelled system is controlled as a whole, because it is not composed of connected, discrete modules. By changing the characteristics of its components, that is, the tubes of the articulatory system, different aspects of the produced voice can be achieved.[9]
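
A lossless concatenated-tube model illustrates how changing individual tubes changes the voice. The sketch below is a hedged illustration built on several assumptions and is not the method of the cited sources. Each section is described only by its cross-sectional area. Sections are chained with standard acoustic transmission-line (ABCD) matrices, and the lips are treated as an ideal open end. Resonances are found where the glottis-to-lips volume-velocity transfer function has a pole. Wall losses, lip radiation and the nasal tract are ignored, and the area values are hypothetical.

```python
import math


def tube_chain_entry(areas, section_len, freq, c=350.0):
    """Return the U_glottis / U_lips entry of the chained tube matrix.

    Each lossless section has the matrix [[cos kl, j*Z*sin kl], [j*sin kl/Z, cos kl]]
    with Z proportional to 1/area. Products of matrices of this shape keep it,
    so each matrix is stored as four reals (a, b, c, d) meaning [[a, j*b], [j*c, d]].
    """
    k = 2.0 * math.pi * freq / c  # wavenumber
    a, b, cc, d = 1.0, 0.0, 0.0, 1.0  # identity matrix
    for area in areas:  # ordered from glottis to lips
        z = 1.0 / area  # impedance up to the constant factor rho * c
        cos_kl, sin_kl = math.cos(k * section_len), math.sin(k * section_len)
        a2, b2, c2, d2 = cos_kl, z * sin_kl, sin_kl / z, cos_kl
        a, b, cc, d = (a * a2 - b * c2, a * b2 + b * d2,
                       cc * a2 + d * c2, d * d2 - cc * b2)
    # With zero pressure at the lips, U_glottis = d * U_lips, so H = 1 / d
    return d


def tube_resonances(areas, section_len, f_max=4000.0, step=10.0):
    """Frequencies (Hz) where d crosses zero, i.e. where H = 1/d has a pole."""
    def entry(f):
        return tube_chain_entry(areas, section_len, f)

    found = []
    lo_f, lo_v = step, entry(step)
    while lo_f + step <= f_max:
        hi_f = lo_f + step
        hi_v = entry(hi_f)
        if lo_v * hi_v < 0:  # sign change: refine by bisection
            left, right = lo_f, hi_f
            for _ in range(50):
                mid = 0.5 * (left + right)
                if entry(left) * entry(mid) <= 0:
                    right = mid
                else:
                    left = mid
            found.append(0.5 * (left + right))
        elif hi_v == 0:
            found.append(hi_f)
        lo_f, lo_v = hi_f, hi_v
    return found


uniform = [3.0] * 10            # ten 1.75 cm sections with equal areas
a_like = [1.0] * 5 + [5.0] * 5  # narrow back, wide front (hypothetical shape)
print(tube_resonances(uniform, 0.0175)[:3])  # close to 500, 1500, 2500 Hz
print(tube_resonances(a_like, 0.0175)[:3])   # first resonance rises, second falls
```

The overall length is unchanged between the two tracts; only the areas differ. Even so, the resonance pattern moves substantially, which is the sense in which the tract, rather than the sound, is controlled.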

Articulatory synthesis faces two inherent problems. First, the model must strike a balance between being precise but complex and being simple but observable and controllable. Because the vocal tract is very complex, models that mimic it closely are inherently complicated, which makes them difficult to track and control. This is connected to the second problem: the model of the vocal tract must be based on data that are as precise as possible. Modern imaging methods such as MRI or X-rays are used to observe the human vocal tract at work during speech, and these data are then used to construct the articulatory model. However, such methods are often intrusive, and the data they produce are difficult to interpret.[9]

Both obstacles reduce the quality of the speech these models produce compared with modern methods, so articulatory synthesis has largely been abandoned for practical speech synthesis. It is still used, however, in research on vocal tract physiology and phonology, and for visualising the human head during speech.[9]

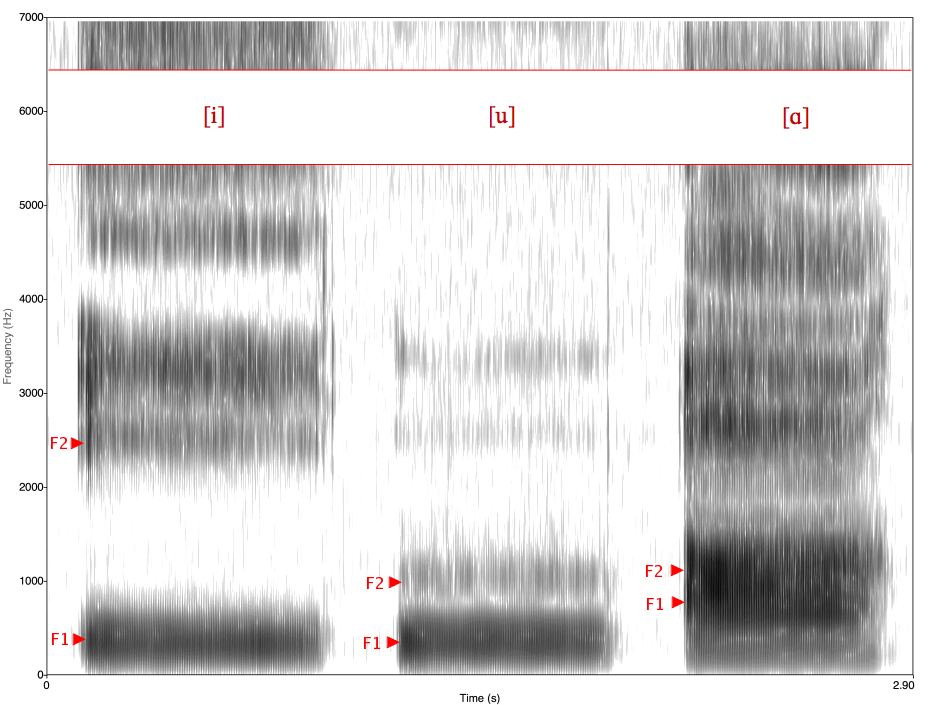

Formant Synthesis

Formant synthesis is the first fully developed method of synthesizing speech, and it was the dominant method until the 1980s. It is sometimes called synthesis 'from scratch' or 'synthesis by rule'.[9] In contrast to the more recent approach of concatenation synthesis, formant synthesis does not use sampled audio; instead, the sound is created from scratch. The result is intelligible, clear-sounding speech, but listeners immediately notice that it was not spoken by a human. The model is too simplistic and does not capture the subtleties of a real human vocal tract, in which many different articulators work together.[5]

Formant synthesis uses a modular, model-based approach to create artificial speech. Synthesizers using this method rely on an observable and controllable model of an acoustic tube. This model typically lays out the vocal tract as two systems functioning in parallel. Sound is generated by a source and then fed to the vocal tract model. In that model the oral and nasal cavities are separate, parallel branches, and the sound passes through only one of them, depending on the type of sound needed at the moment (e.g., whether it is nasalized). The outputs of both cavities are combined and passed through a radiation component that simulates the acoustic characteristics of real lips and nose.[9]
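
The architecture described above can be sketched in a few lines: a source, one of two parallel branches (oral or nasal), and a shared radiation stage. This is a hedged illustration, not a reproduction of any particular synthesizer. The digital resonator follows the widely used Klatt-style second-order design. The glottal source is a crude impulse train, and radiation is approximated by a first difference. All formant frequencies, bandwidths and the sample rate are assumed illustrative values.

```python
import math

FS = 16000  # sample rate in Hz (assumed)

# Illustrative (frequency Hz, bandwidth Hz) pairs; assumed values only
ORAL_BRANCH = [(730, 90), (1090, 110), (2440, 170)]  # roughly an open vowel
NASAL_BRANCH = [(250, 100), (2500, 200)]             # hypothetical nasal resonances


def resonator(x, freq, bw, fs=FS):
    """Second-order digital resonator with unity gain at 0 Hz."""
    t = 1.0 / fs
    c = -math.exp(-2.0 * math.pi * bw * t)
    b = 2.0 * math.exp(-math.pi * bw * t) * math.cos(2.0 * math.pi * freq * t)
    a = 1.0 - b - c
    y1 = y2 = 0.0
    out = []
    for sample in x:
        y = a * sample + b * y1 + c * y2
        out.append(y)
        y1, y2 = y, y1
    return out


def impulse_train(f0, n, fs=FS):
    """Crude voiced source: one unit pulse per pitch period."""
    period = int(round(fs / f0))
    return [1.0 if i % period == 0 else 0.0 for i in range(n)]


def radiation(x):
    """First-difference approximation of radiation from the lips or nose."""
    return [x[0]] + [x[i] - x[i - 1] for i in range(1, len(x))]


def synthesize(n, f0=120.0, nasal=False):
    """Source -> one of two parallel branches -> shared radiation stage."""
    signal = impulse_train(f0, n)
    branch = NASAL_BRANCH if nasal else ORAL_BRANCH
    for freq, bw in branch:  # resonators cascaded within the chosen branch
        signal = resonator(signal, freq, bw)
    return radiation(signal)


vowel = synthesize(FS // 10)               # 100 ms of an oral sound
murmur = synthesize(FS // 10, nasal=True)  # 100 ms routed through the nasal branch
```

Every formant here is an independent pair of numbers. That independent control is exactly the property the next paragraph criticises as physically unrealistic.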

However, this method is not an accurate model of the vocal tract, because it allows separate and independent control over every aspect of each formant. In a real vocal tract, the formants are shaped by the entire system acting as a whole; no single part can be isolated and used as the basis for an artificial model.[9]

Concatenation Synthesis

Concatenation synthesis is a form of speech synthesis that constructs new utterances from short samples of previously recorded speech. These samples vary in length from several milliseconds to about one second. They are subsequently modified so that they fit together better according to the type, structure, and above all the content of the synthesized text.[3] These samples, or units, can be individual phones, diphones, morphemes, or even entire phrases. Instead of changing the characteristics of the sound to generate intelligible speech, concatenation synthesis works with a large set of pre-recorded data. From this set, much like a building set, it builds utterances that were not originally present in the data.[10]
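
The building-set idea can be illustrated by joining pre-recorded units end to end. In this sketch the units are plain lists of samples, and each join is smoothed with a short linear crossfade. The crossfade is an assumed, deliberately simple stand-in for the unit modification the text mentions. The unit names and sample values are hypothetical.

```python
def crossfade_concat(units, overlap):
    """Concatenate sample lists, linearly crossfading `overlap` samples at each join.

    Overlapping samples are blended rather than appended, so each join
    shortens the result by the number of overlapping samples.
    """
    if not units:
        return []
    out = list(units[0])
    for unit in units[1:]:
        n = min(overlap, len(out), len(unit))
        for i in range(n):
            w = (i + 1) / (n + 1)  # weight of the incoming unit ramps up
            out[len(out) - n + i] = (1 - w) * out[len(out) - n + i] + w * unit[i]
        out.extend(unit[n:])
    return out


# Hypothetical diphone units stored as short sample lists
inventory = {
    "h-e": [0.0, 0.2, 0.4, 0.4],
    "e-l": [0.4, 0.3, 0.1, 0.0],
    "l-o": [0.0, -0.1, -0.2, -0.1],
}
utterance = crossfade_concat([inventory[u] for u in ("h-e", "e-l", "l-o")], overlap=1)
```

Real systems also adjust pitch and duration of the units. A crossfade only hides small amplitude discontinuities at the joins.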

Using real recorded sounds allows very high-quality artificial speech to be produced. This is an advantage over formant synthesis, as no approximate model of the entire vocal tract is required. However, some speech characteristics, such as voice character or mood, cannot be modified as easily.[3] A unit placed in a less than ideal position can cause audible glitches and unnatural-sounding speech, because its contours do not fit perfectly with the surrounding units. This problem is minimal in domain-specific synthesizers, but general-purpose systems require large data sets and additional output control that detects and repairs these glitches.[9]
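
The output control mentioned above needs some measure of how badly two neighbouring units fit. One minimal approach assumes each unit is summarised by hypothetical pitch (F0) and energy values at its start and end. It then computes a weighted mismatch at every join and flags joins above a threshold. The features, weights and threshold are illustrative assumptions; practical systems typically use richer spectral features.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    name: str
    f0_start: float      # Hz
    f0_end: float        # Hz
    energy_start: float  # dB
    energy_end: float    # dB


def join_cost(left, right, w_f0=1.0, w_energy=2.0):
    """Weighted mismatch between the end of `left` and the start of `right`."""
    return (w_f0 * abs(left.f0_end - right.f0_start)
            + w_energy * abs(left.energy_end - right.energy_start))


def flag_glitches(units, threshold=30.0):
    """Return (index, cost) for each join whose cost exceeds the threshold."""
    flagged = []
    for i in range(len(units) - 1):
        cost = join_cost(units[i], units[i + 1])
        if cost > threshold:
            flagged.append((i, cost))
    return flagged


sequence = [
    Unit("h-e", 110, 118, 60, 64),
    Unit("e-l", 120, 125, 63, 58),  # smooth join: F0 +2 Hz, energy -1 dB
    Unit("l-o", 160, 150, 70, 62),  # F0 jumps 35 Hz, energy 12 dB: likely audible
]
print(flag_glitches(sequence))  # [(1, 59.0)]
```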

First, a data set of high-quality audio samples needs to be recorded and divided into units. The most common type of unit is the diphone, but larger or smaller units can also be used. The set of such units forms the speech corpus. A diphone inventory can be created artificially[9] or based on many hours of speech recorded by a single speaker,[3][9]. This speech is spoken either monotonously, so as to create units with neutral prosody, or naturally. The corpus is then segmented into discrete units; segments such as silences or breaths are also marked and saved.
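
Segmenting the corpus into diphones can be illustrated with a small sketch. It assumes the recordings have already been labelled at the phone level, with a start and end time in seconds for each phone. Each diphone is cut from the midpoint of one phone to the midpoint of the next, which is the usual definition of a diphone. The label format and example timings are hypothetical.

```python
def phones_to_diphones(phones):
    """Turn [(phone, start, end), ...] into [(diphone, start, end), ...].

    Each diphone runs from the middle of one phone to the middle of the
    next, so the transition between the two phones sits inside the unit.
    """
    diphones = []
    for (p1, s1, e1), (p2, s2, e2) in zip(phones, phones[1:]):
        start = (s1 + e1) / 2
        end = (s2 + e2) / 2
        diphones.append((f"{p1}-{p2}", start, end))
    return diphones


# Hypothetical phone-level labels; "sil" marks silence, which is kept as well
labels = [("sil", 0.00, 0.20), ("a", 0.20, 0.36), ("n", 0.36, 0.44),
          ("o", 0.44, 0.60), ("sil", 0.60, 0.80)]
for unit in phones_to_diphones(labels):
    print(unit)
```

Cutting at phone midpoints places the joins in the relatively stable middle of a sound. The hard-to-model transitions are therefore taken intact from the recording.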

The speech corpus prepared in this way is then used to construct new utterances. A large database, in other words a large set of units with varied prosodic and acoustic characteristics, should help produce more natural-sounding speech.[5] Unit selection synthesis is one of the approaches used in concatenation synthesis. In it, the target utterance is first predicted. Suitable units are then picked from the speech corpus and assembled, and their suitability for the target utterance is tested.[11]
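
The select-and-assemble step is often formulated as a search for the sequence of candidate units with the lowest total cost. That cost is the sum of target costs (how well a unit matches the predicted target) and join costs (how well neighbouring units fit). The sketch below solves this with dynamic programming (a Viterbi search). It is a generic illustration, not necessarily the procedure used in the cited work. The candidate data and both cost functions are hypothetical.

```python
def select_units(candidates, target_cost, join_cost):
    """Pick one candidate per target position, minimising total cost.

    candidates[i] lists the corpus units that could fill position i.
    Returns (total_cost, chosen_units).
    """
    # best[j] = (cost of the cheapest path ending in candidate j, that path)
    best = [(target_cost(0, u), [u]) for u in candidates[0]]
    for i in range(1, len(candidates)):
        new_best = []
        for u in candidates[i]:
            # cheapest way to reach u from any candidate at position i - 1
            cost, path = min(
                ((prev_cost + join_cost(prev_path[-1], u), prev_path)
                 for prev_cost, prev_path in best),
                key=lambda item: item[0],
            )
            new_best.append((cost + target_cost(i, u), path + [u]))
        best = new_best
    return min(best, key=lambda item: item[0])


# Hypothetical corpus: each unit is (label, pitch in Hz)
targets = [120, 125, 118]  # predicted pitch for each position
candidates = [
    [("a1", 119), ("a2", 140)],
    [("b1", 126), ("b2", 100)],
    [("c1", 117), ("c2", 135)],
]


def target_cost(i, unit):
    return abs(unit[1] - targets[i])


def join_cost(left, right):
    return 0.5 * abs(left[1] - right[1])


total, chosen = select_units(candidates, target_cost, join_cost)
print(total, [label for label, _ in chosen])  # 11.0 ['a1', 'b1', 'c1']
```

Keeping only the best path into each candidate makes the search linear in the utterance length. An exhaustive search over all combinations would grow exponentially.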

Historical overview

Although the first attempts to build a speaking machine date back to antiquity and the Middle Ages, the first speech synthesizers were developed at the beginning of the modern era. The history of speech synthesis goes back to the 18th century. At that time the Hungarian civil servant and inventor Wolfgang von Kempelen built a machine from pipes, elbows, and assorted parts of musical instruments. He achieved a sufficient imitation of the human vocal tract with his third iteration. In 1791 he published a comprehensive description of the design in his book Mechanismus der menschlichen Sprache nebst der Beschreibung seiner sprechenden Maschine [The Mechanism of Human Speech, with a Description of a Speaking Machine].[12] Christian Kratzenstein, a Danish scientist who worked in Russia, introduced his own speaking machine at about the same time; however, it could produce only five vowels. Von Kempelen's speaking machine was popularised by Charles Wheatstone in 1837.[13]

Sir Charles Wheatstone, a Victorian-era English inventor, also improved on von Kempelen's design. The renewed interest in phonetics research and Wheatstone's work inspired Alexander Graham Bell to do his own research into the matter and eventually to arrive at the idea of the telephone. In the 1930s, Bell Laboratories created the Voice Operating Demonstrator, or Voder for short, an electronic human speech synthesizer. The Voder was a model of the human vocal tract, essentially an early formant synthesizer. It required a trained operator who created utterances manually using a console with 15 touch-sensitive keys and a pedal.[12]

Digital speech synthesizers began to emerge in the 1980s, following the MIT-developed DECtalk text-to-speech synthesizer.[14] This synthesizer was notably used by the physicist Stephen Hawking.[6]

At the beginning of modern speech synthesis, two main approaches appeared. Articulatory synthesis tries to model the entire human vocal tract, and formant synthesis aims to create from scratch the sounds that make up speech. Both methods, however, have gradually been replaced by concatenation synthesis, which uses a large set of high-quality pre-recorded audio samples called a speech corpus. These samples can be assembled to form new utterances.[3]

Purpose

The purpose of speech synthesis is to model, research, and create synthetic speech for applications where communicating information via text is undesirable or cumbersome. It is used in 'giving voice' to virtual assistants and in text-to-speech, most notably as a speech aid for visually impaired people or for those who have lost their own voice.

Important Dates

- 1769: the first speech synthesizer was developed by Wolfgang von Kempelen

- 1770: Christian Kratzenstein unveiled his speaking machine

- 1837: Charles Wheatstone introduced von Kempelen's machine in England[13]

- 1939: the Voder was unveiled at the World's Fair in New York[14]

- 1953: the first formant synthesizer PAT (Parametric Artificial Talker) was developed by Walter Lawrence[13]

- 1984: DECtalk was introduced by Digital Equipment Corporation[15]

Enhancement/Therapy/Treatment

Speech synthesis plays an important role in the development of intelligent personal assistants, which respond in a natural voice.[2] Daniel Tihelka also points out that speech synthesis can be beneficial whenever information needs to be delivered aurally, and that it could be used in interactive toys for children.[3] Paul Taylor notes that speech synthesis is used in GPS navigation and call centres, as well as in various systems for visually impaired people.[9] Thierry Dutoit suggests that speech synthesis could be used in language education.[1] Speech synthesis is also currently used in applications that help people who have lost their voice.[7]

At present, speech synthesis can preserve a patient's own voice if it is recorded before it is lost. However, the quality of the recording is still an issue. Patients usually have only a few days between being informed about the surgery and the surgery itself, so they cannot record a large number of sentences. In addition, patients tend to be in a difficult psychological state because of the diagnosis, and they are not trained speakers.[16] This issue could be overcome with a voice donor. A voice donor is a person whose voice is not affected by a disease and is similar to the patient's, for instance the patient's brother or daughter.[17]
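
Because patients can record only a few sentences, the text they read should cover as many distinct speech units as possible. The corpus-design work cited above addresses this problem. The sketch below is only a generic greedy heuristic, not that paper's method. It assumes the candidate sentences are already transcribed into phones and counts coverage in diphones. The sentences and phone labels are hypothetical.

```python
def diphones(phones):
    """Set of adjacent phone pairs occurring in one sentence."""
    return {f"{a}-{b}" for a, b in zip(phones, phones[1:])}


def greedy_select(sentences, budget):
    """Repeatedly pick the sentence that adds the most not-yet-covered diphones."""
    covered, chosen = set(), []
    remaining = dict(sentences)
    for _ in range(budget):
        if not remaining:
            break
        best = max(remaining, key=lambda s: len(diphones(remaining[s]) - covered))
        gain = diphones(remaining[best]) - covered
        if not gain:
            break  # nothing new left to cover
        chosen.append(best)
        covered |= gain
        del remaining[best]
    return chosen, covered


# Hypothetical sentences already transcribed into phones
sentences = {
    "s1": ["sil", "a", "n", "a", "sil"],
    "s2": ["sil", "m", "a", "m", "a", "sil"],
    "s3": ["sil", "a", "n", "o", "sil"],
}
chosen, covered = greedy_select(sentences, budget=2)
print(chosen, len(covered))  # ['s1', 's2'] 7
```

The greedy choice is not guaranteed to be optimal. It is simple, though, and it tends to spend a small recording budget on sentences that add new material rather than repeat it.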

Speech synthesis could also be beneficial for students with various disorders, such as attention, learning, or reading disorders. It could also be used for learning a foreign language.[18]

Ethical & Health Issues

Jan Romportl suggests that the more natural-sounding voices produced by current speech synthesizers might not be entirely accepted because of the 'uncanny valley' effect. The concept of the uncanny valley, introduced by Masahiro Mori, holds that artificial systems that appear almost, but not quite, natural provoke negative feelings in humans. However, Romportl's research shows that participants from technical backgrounds and participants with a background in the humanities differ in how readily they accept natural-sounding synthetic voices. The former group was less susceptible to the uncanny valley, whereas it did appear in the reactions of the latter group.[4] In contrast, Paul Taylor claims that people are irritated by the unnatural-sounding voices of certain speech synthesizers and prefer natural ones.[9]

Some users point out privacy issues with speech synthesis applications, primarily with apps that are free of charge.[7]

For more issues linked with speech technologies, see the Speech Technologies synopsis.

Public & Media Impact and Presentation

The most renowned user of a speech synthesizer might be Stephen Hawking, who claims:

This system allowed me to communicate much better than I could before. I can manage up to 15 words a minute. I can either speak what I have written, or save it to disk. I can then print it out, or call it back and speak it sentence by sentence. Using this system, I have written a book, and dozens of scientific papers. I have also given many scientific and popular talks. They have all been well received. I think that is in a large part due to the quality of the speech synthesiser, which is made by Speech Plus. One's voice is very important. If you have a slurred voice, people are likely to treat you as mentally deficient: Does he take sugar? This synthesiser is by far the best I have heard, because it varies the intonation, and doesn't speak like a Dalek. The only trouble is that it gives me an American accent.[19]

Speech synthesis also allowed Roger Ebert, the Pulitzer Prize-winning film critic, to speak again. Because Ebert's voice had been preserved in many recordings, the speech synthesis company CereProc was able to reconstruct his own voice.[20]

Speech synthesis also appears in popular culture. A renowned example is HAL, a speaking computer that plays an important role in Stanley Kubrick's film 2001: A Space Odyssey, which was developed in parallel with Arthur C. Clarke's novel of the same name.[13]

Public Policy

We have not yet recorded any public policy regarding speech synthesis.

Related Technologies, Projects or Scientific Research

Speech synthesis apps depend on smartphones, tablets, or PCs.[7]

References

- [1] DUTOIT, Thierry. High-quality text-to-speech synthesis: an overview. Journal of Electrical & Electronics Engineering [online]. 1997. Available online at: http://citeseerx.ist.psu.edu/viewdoc/download;jsessionid=E773C6478622AEE97E0E678AE3E3B285?doi=10.1.1.495.9289&rep=rep1&type=pdf (Retrieved 7th February, 2017).

- [2] Google. Speech Processing. Research.google.com [online]. Available online at: https://research.google.com/pubs/SpeechProcessing.html (Retrieved 7th February, 2017).

- [3] TIHELKA, Daniel. The Unit Selection Approach in Czech TTS Synthesis. Pilsen. Dissertation Thesis. University of West Bohemia. Faculty of Applied Sciences. 2005.

- [4] ROMPORTL, Jan. Speech Synthesis and Uncanny Valley. In: Text, Speech, and Dialogue. Cham: Springer, 2014, p. 595-602. Doi: 10.1007/978-3-319-10816-2_72 Available online at: http://link.springer.com/chapter/10.1007/978-3-319-10816-2_72 (Retrieved 2nd February, 2017).

- [5] ROMPORTL, Jan. Zvyšování přirozenosti strojově vytvářené řeči v oblasti suprasegmentálních zvukových jevů [Increasing the naturalness of machine-generated speech in the area of suprasegmental sound phenomena]. Pilsen. Dissertation Thesis. University of West Bohemia. Faculty of Applied Sciences. 2008.

- [6] DIZON, Jan. 'Zoolander 2' Trailer Is Narrated By Stephen Hawking's DECTalk Dennis Speech Synthesizer. Tech Times [online]. 2015, Aug 3. Available online at: http://www.techtimes.com/articles/73832/20150803/zoolander-2-trailer-narrated-stephen-hawkings-dectalk-dennis-speech-synthesizer.htm#sthash.hj3Hwq95.dpuf (Retrieved 2nd February, 2017).

- [7] WebWhispers.org. Text to speech apps for Phones and Pads. WebWhispers.org [online]. 2017. Available online at: http://www.webwhispers.org/library/TexttoSpeechApps.asp (Retrieved 16th February, 2017).

- [8] BIRKHOLZ, Peter. About Articulatory Speech Synthesis. VocalTractLab [online]. 2016. Available online at: http://www.vocaltractlab.de/index.php?page=background-articulatory-synthesis (Retrieved 2nd February, 2017).

- [9] TAYLOR, Paul. Text-to-Speech Synthesis. University of Cambridge Department of Engineering [online]. 2014. Available online at: http://mi.eng.cam.ac.uk/~pat40/ttsbook_draft_2.pdf (Retrieved 2nd February, 2017).

- [10] BLACK, Alan W. Perfect Synthesis for all of the people all of the time. Carnegie Mellon School of Computer Science [online]. 2009, Sep 30. Available online at: http://www.cs.cmu.edu/~awb/papers/IEEE2002/allthetime/allthetime.html (Retrieved 2nd February, 2017).

- [11] CLARK, A. J., KING, Simon. Joint Prosodic and Segmental Unit Selection Speech Synthesis. The Centre for Speech Technology Research [online]. 2006. Available online at: http://www.cstr.ed.ac.uk/downloads/publications/2006/clarkking_interspeech_2006.pdf (Retrieved 2nd February, 2017).

- [12] DUDLEY, Homer, TARNOCZY, T. H. The speaking machine of Wolfgang von Kempelen. Journal of the Acoustical Society of America 22, 151-166. Doi: 10.1121/1.1906583. Available online at: http://pubman.mpdl.mpg.de/pubman/item/escidoc:2316415:3/component/escidoc:2316414/Dudley_1950_Speaking_machine.pdf (Retrieved 2nd February, 2017).

- [13] WOODFORD, Chris. Speech synthesizers. EXPLAINTHATSTUFF [online]. 2017, Jan 21. Available online at: http://www.explainthatstuff.com/how-speech-synthesis-works.html (Retrieved 16th February, 2017).

- [14] DUNCAN. Klatt’s Last Tapes: A History of Speech Synthesisers. Communication Aids [online]. 2013, Aug 10. Available online at: http://communicationaids.info/history-speech-synthesisers (Retrieved 2nd February, 2017).

- [15] ZIENTARA, Peggy. DECtalk lets micros read messages over phones. InfoWorld. 1984, Jan 16. p. 21. Available online at: https://books.google.com.au/books?id=ey4EAAAAMBAJ&lpg=PA21&dq=DECtalk%20lets%20micros%20read%20messages%20over%20phone%5D%2C%20By%20Peggy%20Zientara&pg=PA21#v=onepage&q=DECtalk%20lets%20micros%20read%20messages%20over%20phone%5D%2C%20By%20Peggy%20Zientara&f=false (Retrieved 2nd February, 2017).

- [16] JŮZOVÁ, M., TIHELKA, D., MATOUŠEK, J. Designing High-Coverage Multi-level Text Corpus for Non-professional-voice Conservation. In: Speech and Computer. Volume 9811 of the series Lecture Notes in Computer Science. Cham: Springer, 2016, pp. 207-215. Doi: 10.1007/978-3-319-43958-7_24 Available online at: http://link.springer.com/chapter/10.1007/978-3-319-43958-7_24 (Retrieved 16th February, 2017).

- [17] YAMAGISHI, Junichi. Speech synthesis technologies for individuals with vocal disabilities: Voice banking and reconstruction. Acoustical Science and Technology. 2012, 33(1), p. 1-5. Doi: 10.1250/ast.33.1 Available online at: https://www.jstage.jst.go.jp/article/ast/33/1/33_1_1/_article (Retrieved 16th February, 2017).

- [18] The University of Kansas. Who can benefit from speech synthesis? The University of Kansas [online]. Available online at: http://www.specialconnections.ku.edu/?q=instruction/universal_design_for_learning/teacher_tools/speech_synthesis (Retrieved 16th February, 2017).

- [19] HAWKING, Stephen. Disability my experience with ALS. Hawking.org.uk [online]. Available online at: https://web.archive.org/web/20000614043350/http://www.hawking.org.uk/disable/disable.html (Retrieved 2nd February, 2017).

- [20] MILLAR, Hayley. New voice for film critic Roger Ebert. BBC News [online]. 2010, Mar 3. Available online at: http://news.bbc.co.uk/2/hi/uk_news/scotland/edinburgh_and_east/8547645.stm (Retrieved 16th February, 2017).